- 04 Dec 2023

- 3 Minutes to read

Experiment (A/B) Email Campaigns

- Updated on 04 Dec 2023

Experiment campaigns help you A/B test your single email designs and optimize your campaign performance.

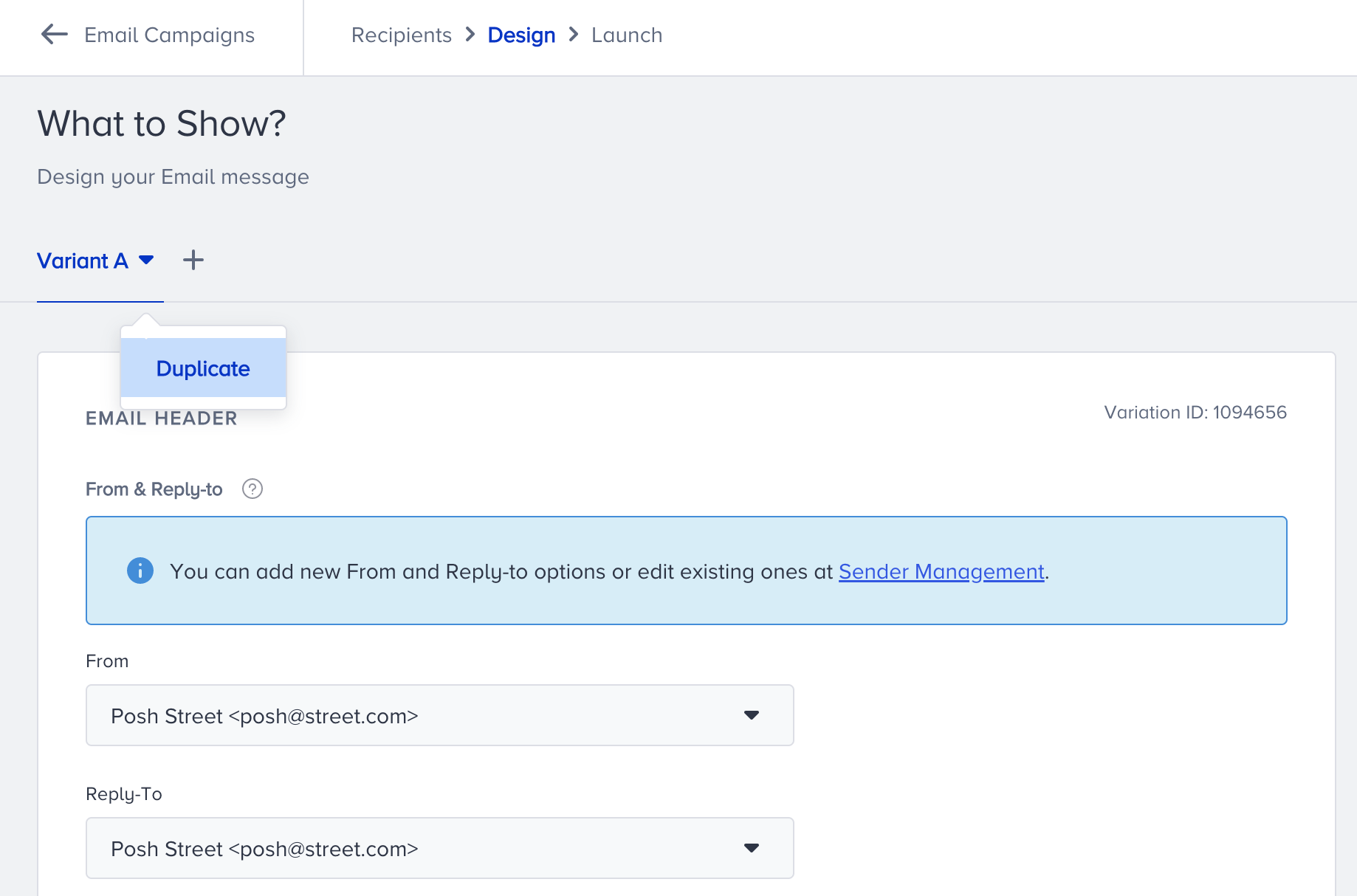

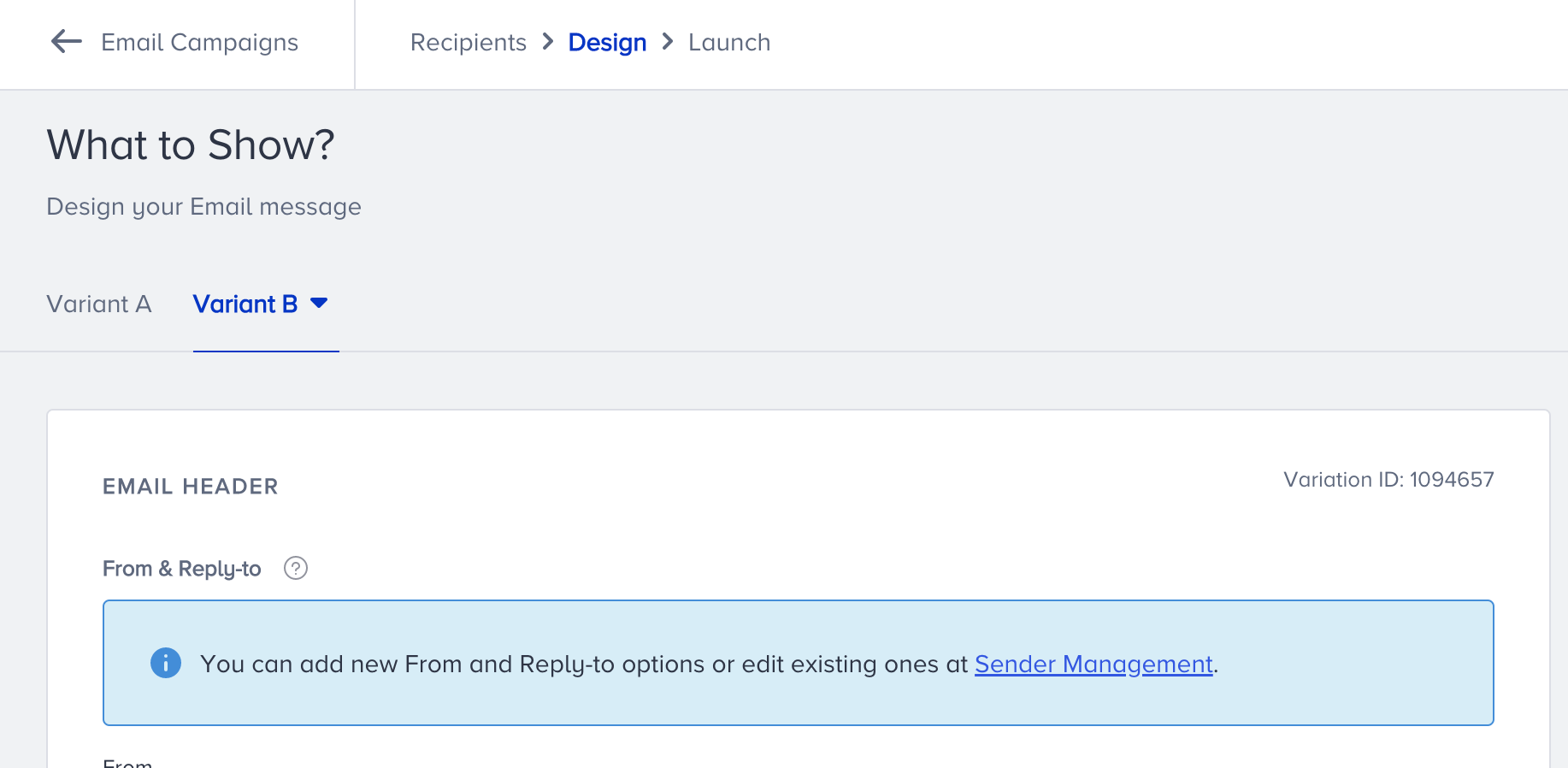

After configuring your audience on the Recipients step, you proceed to the Design step, where you create your email design. Variant A is already created in your campaign.

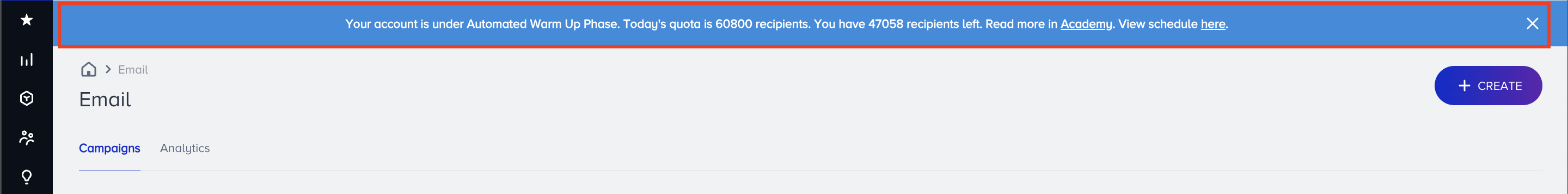

If your account is still in the warm-up phase, A/B testing is not available in email campaigns. It is enabled once the warm-up period is completed. While your account is in the warm-up period, a toaster notification in your InOne panel informs you of this.

To create an experiment campaign, you can:

- Click Variant A to duplicate it.

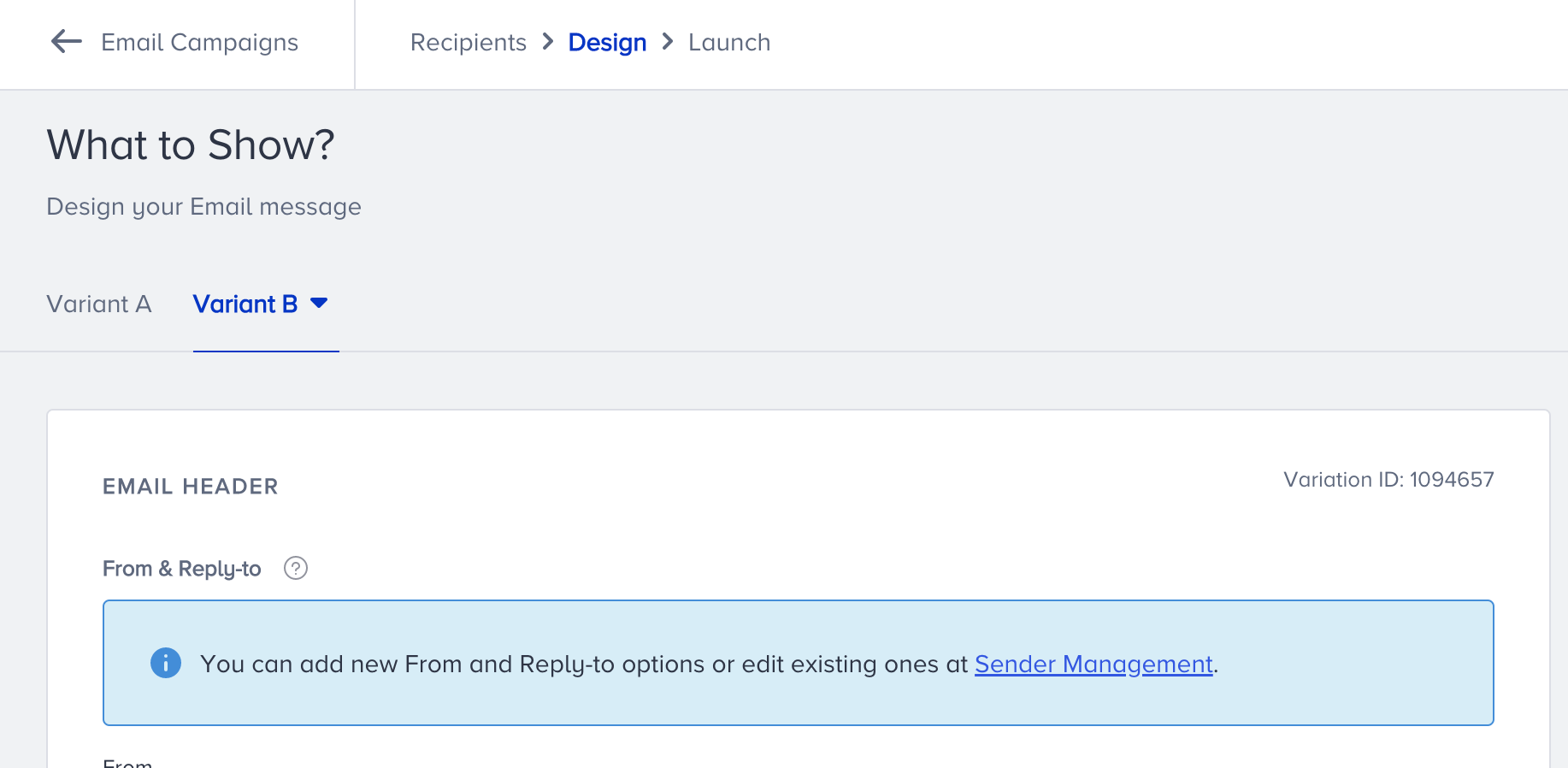

- Click the plus (+) button above to add Variant B. Variant B is then listed right next to Variant A.

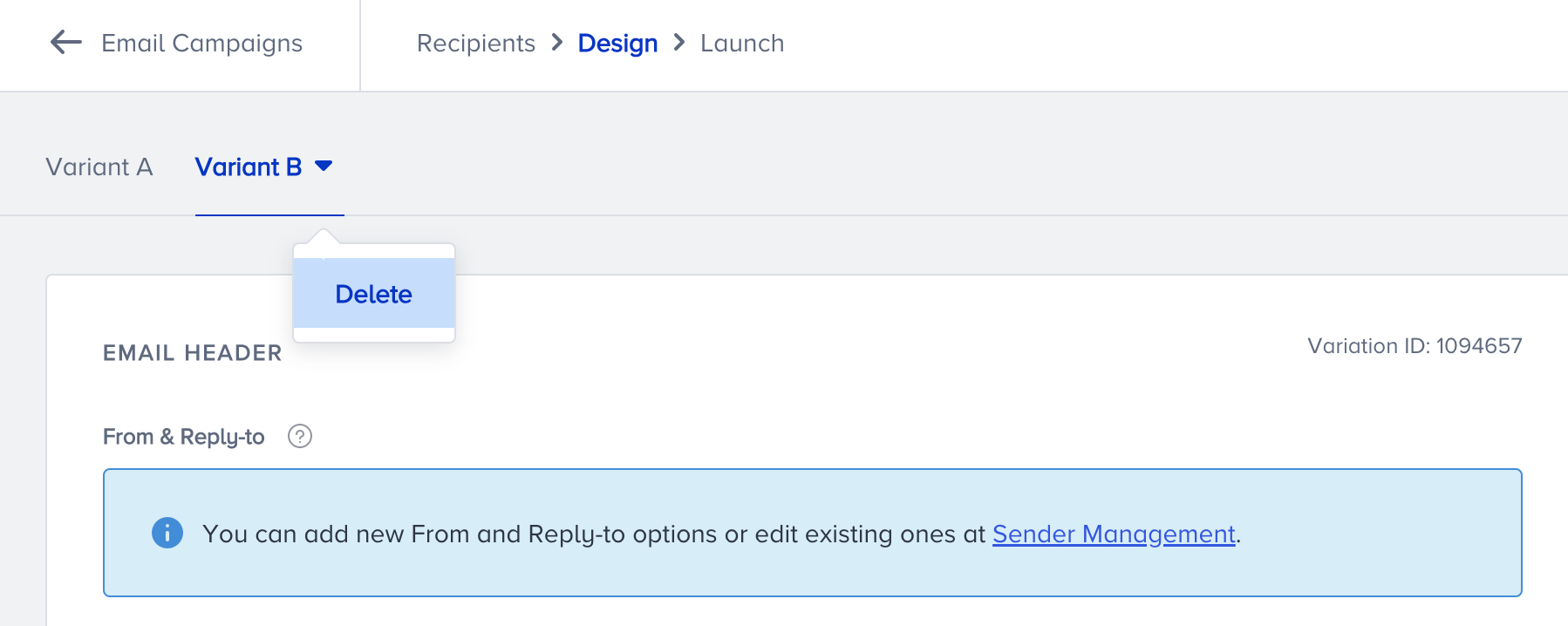

To delete this new variant, click Variant B > Delete. Variant A cannot be deleted because each campaign requires at least one email design.

With Variant B, you can vary any of the following items and A/B test them in your experiment campaign:

- From address

- Reply-to address

- Preheader

- Subject line

- Mail design

- UTM parameters

How does it work?

Once you finish designing your email, configure its launch settings. When your email campaign has a second variant, you complete the experiment configuration on the Launch step. The A/B test settings let you set the size of your test pool and the test duration.

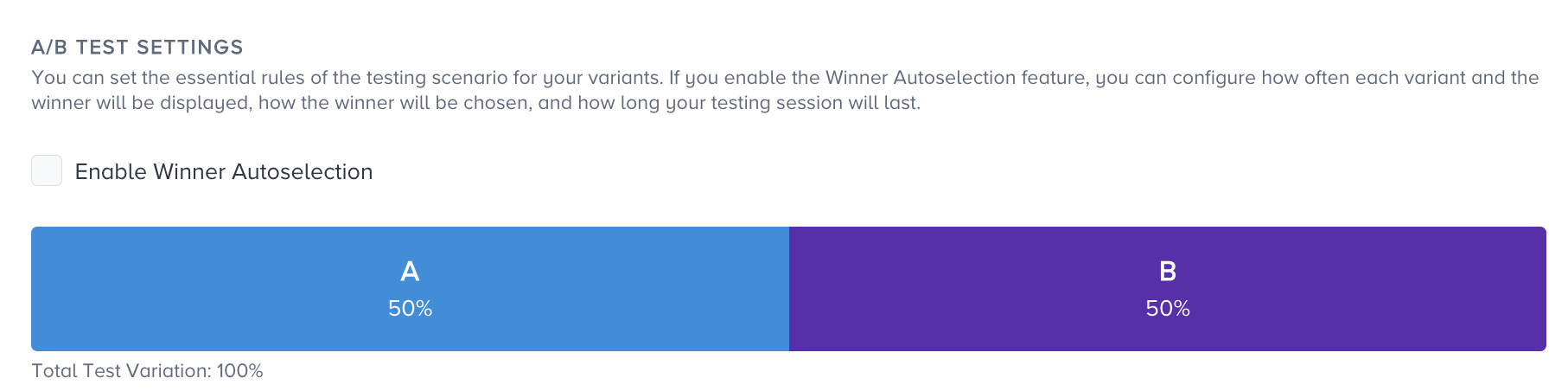

50/50 Testing

By default, your experiment campaign uses a 50/50 split between the two variants. To allocate the percentages manually, enable winner autoselection.

You can see the results of the test for each metric separately.

Enable Winner Autoselection

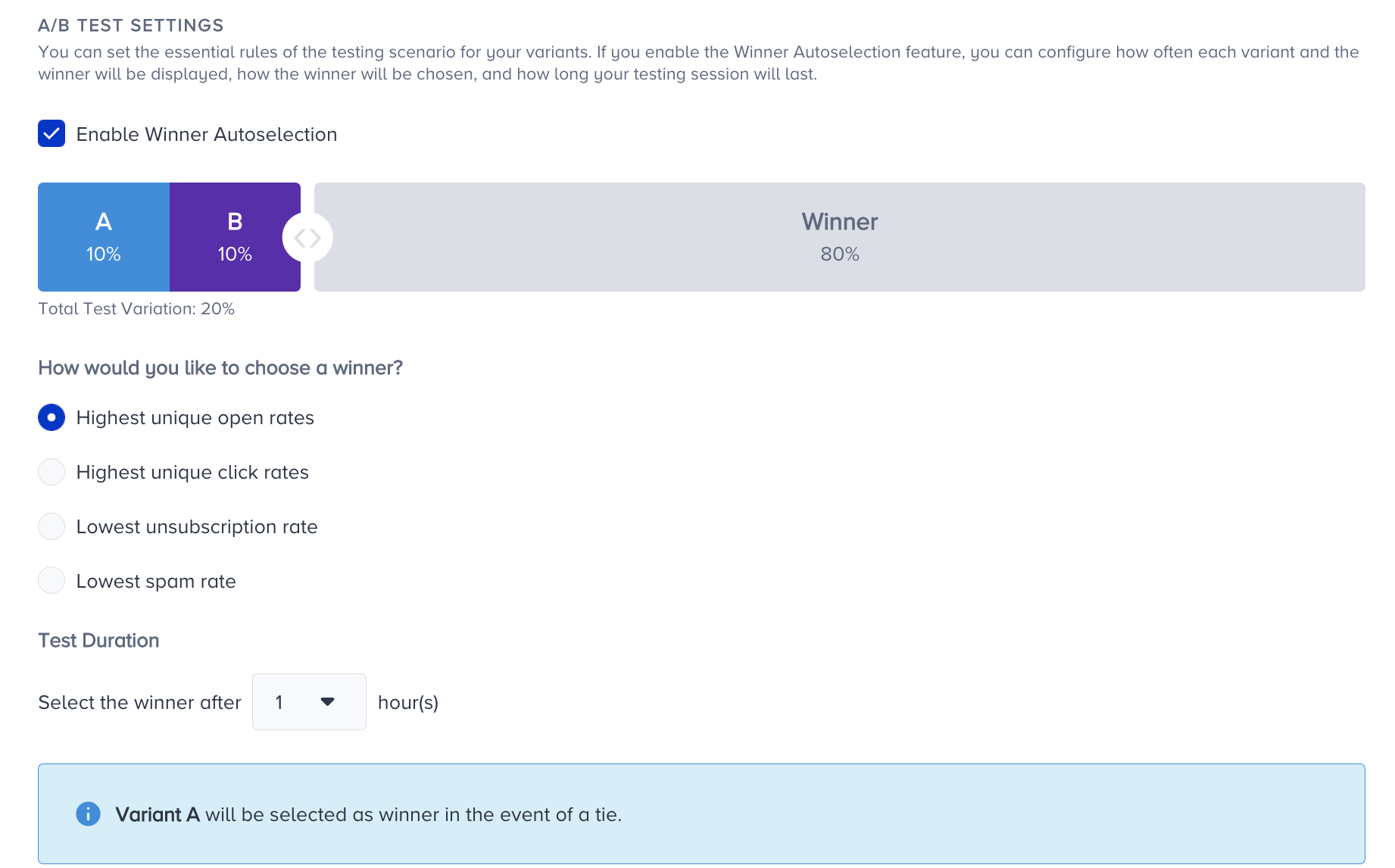

Once you enable the winner autoselection, you can drag the group allocations to determine the percentage of subscribers that will be targeted as the test pool.

Each variant can be allocated a minimum of 10% and a maximum of 45% of your subscribers. Subscribers are distributed to the groups randomly.
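The allocation limits can be illustrated with a minimal Python sketch. It is not part of the product; the function name `validate_allocation` is hypothetical, and the sketch assumes the two variants may be given different percentages, which this article does not state explicitly.

```python
MIN_PERCENT = 10  # minimum share per variant, as stated above
MAX_PERCENT = 45  # maximum share per variant, as stated above


def validate_allocation(percent_a: int, percent_b: int) -> int:
    """Check both test groups against the limits.

    Returns the percentage of subscribers left outside the test pool,
    i.e. the share that would later receive the winning variant.
    """
    for name, value in (("A", percent_a), ("B", percent_b)):
        if not MIN_PERCENT <= value <= MAX_PERCENT:
            raise ValueError(
                f"Variant {name} must be between {MIN_PERCENT}% and "
                f"{MAX_PERCENT}%, got {value}%"
            )
    return 100 - percent_a - percent_b


print(validate_allocation(20, 20))  # 60
print(validate_allocation(45, 45))  # 10
```

With both variants at the 45% maximum, 10% of subscribers remain to receive the winner; with both at the 10% minimum, 80% remain.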

The winning variant is autoselected based on the method you choose:

- Highest unique open rates

- Highest unique click rates

- Lowest unsubscription rate

- Lowest spam rate
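The four methods above amount to picking the maximum or minimum of a single metric. The following Python sketch shows that idea under stated assumptions: the class `VariantStats`, the method keys, and the sample rates are all hypothetical and only illustrative. How the platform resolves ties is not described here; this sketch simply keeps the first variant in the list.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    name: str
    unique_open_rate: float
    unique_click_rate: float
    unsubscription_rate: float
    spam_rate: float


# Hypothetical method keys mapped to (metric attribute, higher_is_better).
WINNER_METHODS = {
    "highest_unique_open_rate": ("unique_open_rate", True),
    "highest_unique_click_rate": ("unique_click_rate", True),
    "lowest_unsubscription_rate": ("unsubscription_rate", False),
    "lowest_spam_rate": ("spam_rate", False),
}


def pick_winner(variants: list[VariantStats], method: str) -> VariantStats:
    """Return the variant that wins under the chosen method."""
    attribute, higher_is_better = WINNER_METHODS[method]
    chooser = max if higher_is_better else min
    return chooser(variants, key=lambda v: getattr(v, attribute))


# Made-up numbers for illustration only.
variant_a = VariantStats("A", 0.30, 0.05, 0.010, 0.002)
variant_b = VariantStats("B", 0.28, 0.08, 0.004, 0.001)

print(pick_winner([variant_a, variant_b], "highest_unique_click_rate").name)  # B
print(pick_winner([variant_a, variant_b], "highest_unique_open_rate").name)   # A
```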

Test Duration

With Test Duration, you set how long the test runs before your campaign has a winner. You can set it to between 1 and 24 hours.

Consider an example to better understand the A/B test settings. You allocate 20% to each of Variant A and Variant B, choose "Highest unique click rates" as your winner method, and set a 1-hour test duration.

Suppose that you have 100 recipients. Variant A and Variant B are each assigned 20 randomly selected subscribers. The campaign is sent to these subscribers, and their engagement is observed for 1 hour. After 1 hour, the system assesses the unique click rate of each variant. If Variant B has the higher unique click rate, it is sent to the remaining recipients: 100 minus the 40 who already received Variant A or Variant B, which leaves 60.
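The arithmetic in this example can be reproduced with a short Python sketch. The function `split_recipients` and the placeholder addresses are hypothetical, and rounding group sizes down for lists that do not divide evenly is an assumption, not documented platform behavior.

```python
import random


def split_recipients(recipients, percent_a, percent_b, seed=None):
    """Randomly split recipients into group A, group B, and the remainder."""
    rng = random.Random(seed)
    shuffled = list(recipients)
    rng.shuffle(shuffled)  # random distribution into groups

    # Assumption: sizes are rounded down when the split is not exact.
    size_a = len(shuffled) * percent_a // 100
    size_b = len(shuffled) * percent_b // 100

    group_a = shuffled[:size_a]
    group_b = shuffled[size_a:size_a + size_b]
    remainder = shuffled[size_a + size_b:]  # later receives the winner
    return group_a, group_b, remainder


recipients = [f"user{i}@example.com" for i in range(100)]
group_a, group_b, remainder = split_recipients(recipients, 20, 20, seed=42)

print(len(group_a), len(group_b), len(remainder))  # 20 20 60
```

Each recipient lands in exactly one of the three groups, so no one receives both a test variant and the winning variant.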

How should you evaluate the campaign performance?

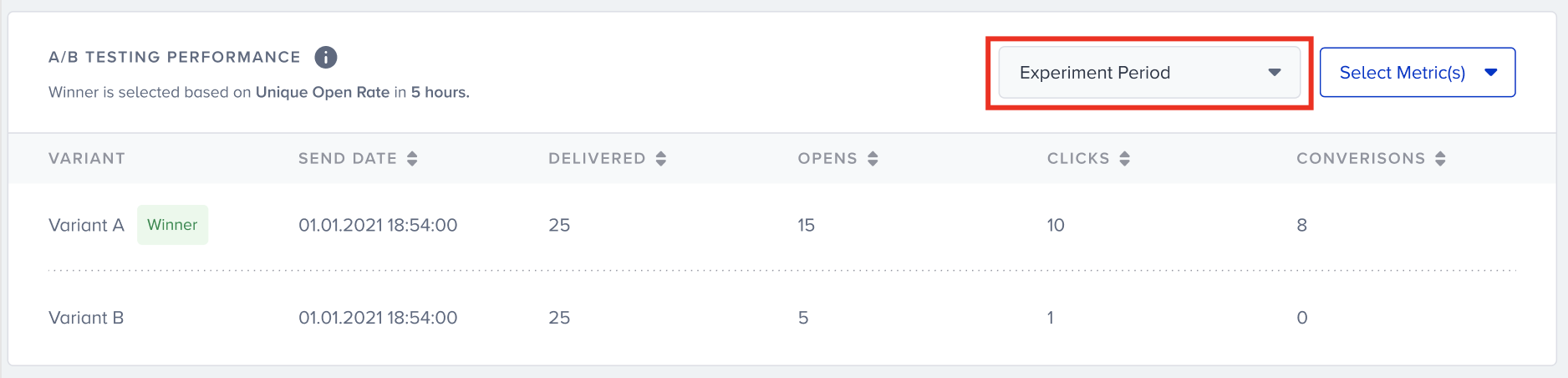

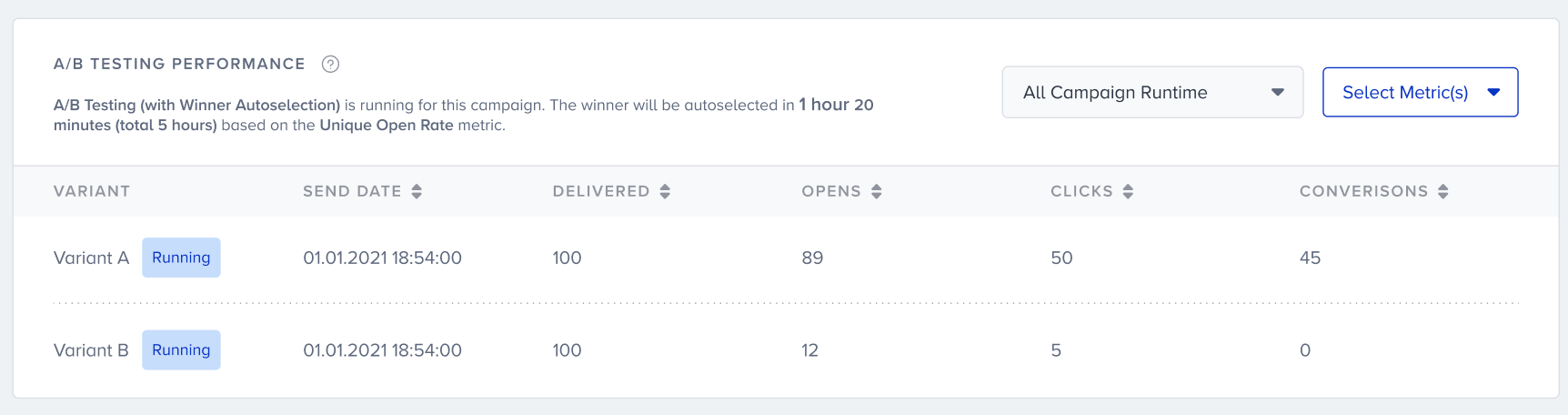

You can use the campaign analytics to evaluate the performance of your experiment campaigns during the experiment period. You can easily see which metric your experiment is based on.

At the top of the page, you can see the overall statistics. These metrics cover both the testing period and the post-test period. At the bottom of the page, you can see detailed statistics for each variant.

You can see the performance of both variants while they are in the Running status, as well as the performance of the winning variant. To evaluate your campaign in depth, choose the metrics you want to see in the variant comparison table.

The experiment period is disabled for 50/50 campaigns as there is no additional experiment for 50/50 testing.